Consciousness is an ambiguous concept. It can mean a lot of different things, depending on the context. For example, I can say that I am conscious of something, as in, “I am conscious of the fact that I am currently writing about consciousness.” It can also mean self-consciousness or self-awareness: the notion that you know that you exist, and that you have a self.

These definitions of consciousness are among what the philosopher David Chalmers called the “easy problems” of consciousness. They’re not easy in the sense that we could quickly figure out how the brain creates these psychological phenomena; rather, they’re easy in the sense that it seems like neuroscience could eventually figure out how the brain creates them. In other words, they all seem like specific psychological functions that could in principle be explained in terms of neuroscience and psychology.

Chalmers distinguished these problems from what he termed the “hard problem” of consciousness: the problem of how the brain can create raw, sensory experience at all. How can the brain create the redness of red, the blueness of blue, the sting of pain, or the thrill of laughter? These are all sheer, raw experiences. Chalmers calls this the hard problem of consciousness because it seems that science couldn’t, even in principle, explain these phenomena. That’s because science is in the business of studying things objectively: to the extent that it can, it takes subjective experience out of the equation.

To Chalmers, and to many other philosophers and scientists, it seems that there is no obvious way that science could build a bridge between objective descriptions of the physical processes of the brain and subjective descriptions of the contents of experience. Neural processes and experience seem like categorically different things. There is, to borrow another term from philosophers, an “explanatory gap” between consciousness and the brain. It just seems like we can’t explain one in terms of the other.

But Chalmers offered one interesting proposal for how we can build a bridge between the processes of the brain and the processes of the conscious mind, and that bridge is information.

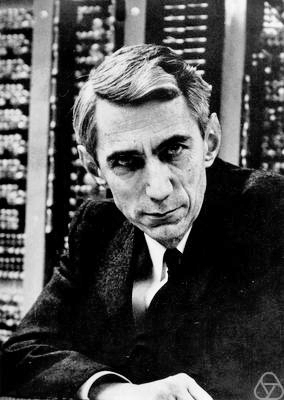

In everyday language, information is as ambiguous a concept as consciousness, but it has a precise mathematical definition. In the late 1940s, the mathematician Claude Shannon published a groundbreaking paper titled “A Mathematical Theory of Communication,” in which he introduced a mathematical framework for efficiently sending messages over communication channels such as telegraph lines. His most transformative insight was to see the deep connection between information and the notion of entropy, which he borrowed from thermodynamics.

Entropy is a measure of the disorder, or unpredictability, of a system. Shannon realized that you need disorder in order to create information. To see why, imagine that English had only a single word. Let’s say that word was “cat.” Moreover, imagine that you could say the word “cat” in only one way, with exactly the same pause between each utterance. A typical sentence in this stripped-down version of English would go something like this: “Cat cat cat cat cat cat cat cat cat cat cat.”

Clearly, it would be impossible to convey any message – any information – using a language that consisted only of the word “cat.” That’s because everything you said would be totally predictable. In other words, this language would have zero entropy. Fortunately, our languages are highly variable, containing many thousands of words that can be combined in a nearly limitless number of ways. In mathematical terms, our languages are extraordinarily high in entropy, and that’s what allows them to carry information.
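To make the “cat” example concrete, here is a minimal sketch that measures the Shannon entropy, H = -Σ p log₂ p, of the word frequencies in a sample of speech. The function name `shannon_entropy` and the toy four-word language are illustrative assumptions, not anything from Shannon’s paper; the point is only that a language whose next word is always “cat” scores zero bits, while even four equally common words score two bits per word.

```python
import math
from collections import Counter

def shannon_entropy(symbols):
    """Shannon entropy, in bits per symbol, of the empirical distribution of `symbols`."""
    counts = Counter(symbols)
    total = len(symbols)
    # Sum p * log2(1/p) over every distinct symbol.
    return sum((c / total) * math.log2(total / c) for c in counts.values())

# The one-word "cat" language: every utterance is identical, so nothing is unpredictable.
cat_only = ["cat"] * 11
print(shannon_entropy(cat_only))  # 0.0

# A hypothetical four-word language in which each word is equally common.
four_words = ["cat", "dog", "sat", "ran"] * 3
print(shannon_entropy(four_words))  # 2.0
```

Adding more words, or making them more evenly used, raises the entropy further, which is the sense in which a rich natural language is “high in entropy.”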

With this basic insight, Shannon built up a whole mathematical framework for studying information, which transformed both modern engineering and science. Shortly after he published his seminal paper, Shannon realized that the mathematical ideas he introduced in that paper could be applied to any system across which information is transmitted, such as radios, computers, telephone lines, and, eventually, the internet. Today, his work is the cornerstone of all communications engineering.

But not long after Shannon published his original paper, neuroscientists realized that his ideas could be applied both to the processes of the brain and to the processes of the mind. And that’s what’s so remarkable about Shannon’s theory: it can describe information in any substrate, whether physical or mental.

To see how information theory can describe both the processes of the brain and the processes of the mind, let’s consider a very simple animal: the tick. The tick’s sensory world can be reduced to just three important signals: the smell of butyric acid, whether or not something is at 37 degrees Celsius, and whether or not something has the texture of hair. These are the only signals the tick needs to detect the presence of mammalian blood, its food source. If the tick smells butyric acid, which is secreted from mammalian skin, it blindly drops off a blade of grass or a bush. If it lands on something at around 37 degrees Celsius, the temperature of mammalian blood, then it has gotten lucky and landed on its target. The tick then searches for a patch of skin that doesn’t feel hairy and proceeds to suck blood from it.

This sensory world of the tick is so simple that we can, for the purpose of this example, pare it down to a 3-digit binary code. The first digit of this code would be a 1 if the tick detects butyric acid, and a 0 if not. The second digit would be a 1 if the tick detects a surface at 37 degrees Celsius, and a 0 if not. Finally, the third digit would be a 1 if the tick detects hair, and a 0 if not. In the terms of Shannon’s information theory, this code can carry at most 3 bits of information, and it carries exactly 3 bits when all eight possible combinations of digits are equally likely.
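The tick’s code can be checked the same way. This sketch enumerates the eight possible 3-digit readings and computes their entropy under two assumed distributions: one in which every reading is equally likely, which gives the full 3 bits, and one with made-up, heavily skewed probabilities, which gives well under 1 bit. Both distributions are hypothetical and chosen only for illustration; they are not measurements of real tick sensory statistics.

```python
import math
from itertools import product

# Encoding from the text: (butyric acid, 37 °C surface, hair), with 1 = detected.
tick_states = list(product([0, 1], repeat=3))
print(len(tick_states))  # 8

def entropy_bits(probabilities):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    return sum(p * math.log2(1 / p) for p in probabilities if p > 0)

# If all eight readings were equally likely, the code would carry its maximum: 3 bits.
uniform = [1 / len(tick_states)] * len(tick_states)
print(entropy_bits(uniform))  # 3.0

# Hypothetical, made-up probabilities: the first state (0, 0, 0), "nothing detected",
# dominates, so each reading is far more predictable and carries much less than 3 bits.
skewed = [0.93] + [0.01] * 7
print(round(entropy_bits(skewed), 2))
```

This is why the number of binary digits is best read as a ceiling: 3 digits give room for 3 bits, but how much information they actually carry depends on how unpredictable the readings are.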

And here’s the key insight for how information can bridge the brain and mind: these 3 bits of information are instantiated by both the tick’s mind and its brain. In other words, the presence or absence of butyric acid, the presence or absence of 37 degrees celsius, and the presence or absence of hair are represented in both the tick’s senses and in the neural processes underlying its senses.

From a mathematical perspective, the information structure of the tick’s mind is indistinguishable from the information structure of the neural processes that produce its mind.

This fascinating duality of information has led several philosophers and scientists to suggest that information might be the bridge between the mind and the brain, though this position has its critics.

While the philosophical nuances of this idea are fascinating, as a neuroscientist I’m more interested in the scientific potential of seeing information as the bridge between the brain and mind. If information theory does in fact help us understand consciousness, then it could (in principle) do so in a mathematically precise way – and when things can be stated mathematically, scientists can make precise, quantitative predictions. If our experimental observations agree with those predictions, then we will have arrived at a truly scientific theory of consciousness.

There are already some findings suggesting that information theory may help bridge the gap between the brain and the conscious mind. As any anesthesiologist will tell you, a patient’s loss of consciousness reliably corresponds to a profound loss of entropy: if you record the brain activity of a patient receiving anesthesia, you’ll see that as the anesthetic takes effect, brain signals become highly regular and ordered, leading to a precipitous drop in entropy. And as soon as the patient wakes up, entropy shoots back up to waking levels. It turns out that the same thing happens in other states of unconsciousness, including dreamless sleep and seizures. Low entropy seems to be a general feature of the unconscious brain.
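One way to see what “regular and ordered” means in entropy terms is permutation entropy, a measure that counts how often short rising-and-falling patterns recur in a signal. It is only one of several entropy-style measures applied to brain recordings, and it is swapped in here purely for illustration; it is not necessarily the measure used in the anesthesia studies mentioned above. The two signals below are synthetic stand-ins (random noise for an irregular, waking-like trace and a pure sine wave for a highly regular one), not real EEG, and the names `awake_like` and `anesthesia_like` are hypothetical labels.

```python
import math
import random
from collections import Counter

def permutation_entropy(signal, order=3):
    """Normalized permutation entropy: 0 = perfectly regular, 1 = maximally irregular.

    Each window of `order` consecutive samples is reduced to its ordinal pattern
    (the rank order of its values); the Shannon entropy of the pattern frequencies
    is then divided by its maximum possible value, log2(order!).
    """
    patterns = Counter(
        tuple(sorted(range(order), key=lambda i: signal[t + i]))
        for t in range(len(signal) - order + 1)
    )
    total = sum(patterns.values())
    h = sum((c / total) * math.log2(total / c) for c in patterns.values())
    return h / math.log2(math.factorial(order))

random.seed(0)
n = 2000
# Synthetic toy signals, not real brain recordings:
awake_like = [random.gauss(0, 1) for _ in range(n)]                   # irregular noise
anesthesia_like = [math.sin(2 * math.pi * t / 50) for t in range(n)]  # highly regular wave

print(round(permutation_entropy(awake_like), 2))       # close to 1
print(round(permutation_entropy(anesthesia_like), 2))  # much lower
```

The noise visits all six possible 3-sample patterns about equally often, while the sine wave spends almost all of its time in just two (steadily rising or steadily falling), so its entropy is far lower.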

This is quite revealing. We know from information theory that entropy sets an upper limit on how much information a signal can carry: the higher a signal’s entropy, the more information it can carry; the lower its entropy, the less. So, on a fundamental mathematical level, the drop in entropy during loss of consciousness means that the brain’s capacity to carry information shrinks in this state.
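The upper limit mentioned here can be stated precisely: the mutual information I(X;Y) between a source X and whatever receives it, Y, can never exceed the source’s entropy H(X). The sketch below assumes a hypothetical binary source sent through a channel that flips bits with some probability (the helper `binary_channel` is an illustrative assumption). With a perfect channel, the information received equals the source’s entropy, so a low-entropy source delivers little information no matter how good the channel is; adding noise only lowers it further.

```python
import math

def entropy(probs):
    """Shannon entropy, in bits, of a discrete distribution given as a list of probabilities."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

def mutual_information(joint):
    """I(X;Y) in bits, from a joint distribution given as a 2-D list joint[x][y]."""
    px = [sum(row) for row in joint]        # marginal distribution of X
    py = [sum(col) for col in zip(*joint)]  # marginal distribution of Y
    return sum(
        pxy * math.log2(pxy / (px[i] * py[j]))
        for i, row in enumerate(joint)
        for j, pxy in enumerate(row)
        if pxy > 0
    )

def binary_channel(p_one, flip):
    """Hypothetical setup: a binary source X with P(X=1) = p_one, sent through a
    channel that flips each bit with probability `flip`. Returns joint[x][y]."""
    px = [1 - p_one, p_one]
    return [[px[x] * ((1 - flip) if y == x else flip) for y in (0, 1)] for x in (0, 1)]

# A perfect (noise-free) channel: the information received equals the source entropy.
for p_one in (0.5, 0.1, 0.0):
    h = entropy([1 - p_one, p_one])
    info = mutual_information(binary_channel(p_one, flip=0.0))
    print(f"P(X=1)={p_one}: H(X)={h:.3f} bits, I(X;Y)={info:.3f} bits")

# A noisy channel can only lose information: here I(X;Y) falls below H(X) = 1 bit.
print(f"{mutual_information(binary_channel(0.5, flip=0.1)):.3f} bits")
```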

If you think about it, this makes sense on a subjective level. Our conscious mind is flooded with sensory information. We have no idea how many bits of information are represented in the conscious mind at any given moment, but the number is surely enormous. And when we lose consciousness, all that information disappears as our sensory and cognitive worlds fade into nothingness. In other words, anesthesia causes a loss of information in both the mind and the brain.

These observations have led to several information-based theories of consciousness, such as Giulio Tononi’s Integrated Information Theory. But truth is ultimately tested on the anvil of experiment: such theories may be beautiful, but they need to make specific and counterintuitive predictions, and those predictions have to be tested in real brains.

Currently, researchers around the world, including myself, are working to test predictions from such theories. It’s far too early to tell if these information-based theories will be empirically successful. But, whatever the truth of the matter ends up being, it does seem likely that information theory will have some role to play in this great frontier of modern science (and philosophy). And I am both thrilled and grateful to be in the thick of it!